AI agents orchestrating integrations live, at runtime, are exciting and can be a game changer.

Until you start considering mission-critical use cases such as financial transactions, purchase order workflows, and regulatory compliance. These are high-stakes, heavily governed processes where reliability is non-negotiable. In these scenarios, the same autonomy that drives innovation elsewhere can become a liability. A misinterpreted compliance rule, a hallucination, or a failure to complete the task, and suddenly the benefits of autonomous systems are eclipsed by the cost of failure.

But it doesn’t have to be this way. What if, instead of letting AI agents improvise at runtime, you had them design, build, and evolve integrations “as code”, using the DevOps processes your company already has in place and trusts?

This represents a different expression of autonomy—one that happens before execution, not during it. And it’s this distinction that unlocks a systematic way to scale agentic workflows across the enterprise.

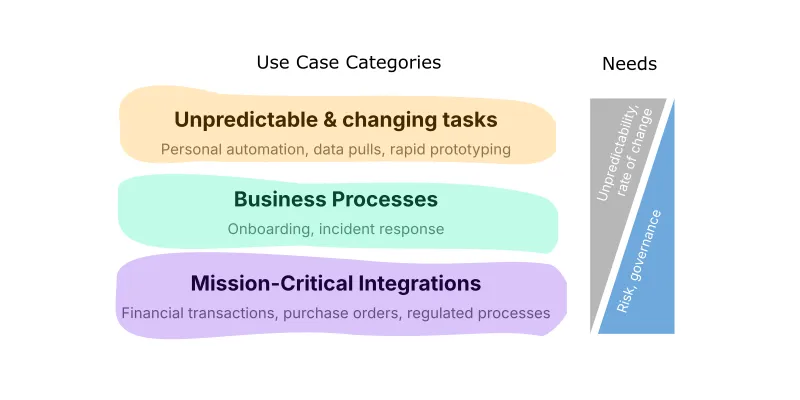

The Use Case Reality: Different Business Scenarios Have Different Needs

Not all integration problems are created equal. Real-world use cases vary widely in speed, predictability, and acceptable risk, and the way you apply autonomy must reflect that.

Before deciding how AI agents should behave, it’s essential to first understand the nature of the business scenario you’re solving.

Unplanned Tasks: Improvisation Over Control

- Examples: Marketing campaign data pulls, chatbot integrations, rapid prototyping, repetitive tasks, user-triggered automations.

- Characteristics: Spontaneous, exploratory, creative, interactive, isolated tasks that require quick, one-off solutions.

- What success looks like: Value comes from moving fast and iterating. Solutions don’t need to be perfect; they need to be useful now. Tasks may differ every time, and success is measured by speed, utility, and how easily a human can course-correct.

- Risk tolerance: High. Mistakes are expected and easily corrected. Human validation ensures safety and accuracy.

Business Processes: Adaptability Inside Structure

- Examples: Customer onboarding, supply chain workflows, incident response.

- Characteristics: Multi-step, predefined workflows with a defined structure. Some steps are only semi-predictable, i.e., they have a clear goal but require dynamic decision-making based on changing conditions (e.g., navigating a UI that changes frequently).

- What success looks like: Consistency in structure with flexibility at the step level. The process must remain auditable, with the ability to escalate to a human when logic fails, requires validation, or conditions change too rapidly.

- Risk tolerance: Moderate. Errors can be mitigated through validations and guardrails. Critical decisions and validations may be escalated to humans.
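To make the escalation idea concrete, here is a minimal Python sketch of a single agent-driven step inside a predefined workflow. The `decide` callable stands in for the agent's runtime decision and `validate` for a guardrail. `run_step`, `StepResult`, and the customer-tier example are hypothetical names chosen for illustration, not part of any product API. Any exception or failed validation routes the step to a human instead of letting the workflow continue on a guess, and every outcome is recorded in an audit log.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical sketch: one agent-driven step in a predefined business process.

@dataclass
class StepResult:
    status: str                     # "completed" or "escalated"
    output: Optional[dict]          # the agent's output, if it produced one
    audit_log: list = field(default_factory=list)


def run_step(name: str,
             decide: Callable[[dict], dict],
             validate: Callable[[dict], Optional[str]],
             payload: dict) -> StepResult:
    """Run one step; escalate to a human on failure instead of guessing."""
    log = [f"{name}: started"]
    try:
        output = decide(payload)    # stand-in for the agent's runtime decision
    except Exception as exc:        # logic failed: hand over to a human
        log.append(f"{name}: decision failed ({exc!r}); escalated to human")
        return StepResult("escalated", None, log)

    error = validate(output)        # guardrail: None means the output is acceptable
    if error is not None:
        log.append(f"{name}: validation failed ({error}); escalated to human")
        return StepResult("escalated", output, log)

    log.append(f"{name}: completed")
    return StepResult("completed", output, log)


# Illustrative step (hypothetical): assign a customer tier during onboarding.
def assign_tier(customer: dict) -> dict:
    return {"tier": "gold" if customer["annual_spend"] > 100_000 else "standard"}


def tier_is_known(output: dict) -> Optional[str]:
    return None if output.get("tier") in {"gold", "standard"} else "unknown tier"


result = run_step("assign_tier", assign_tier, tier_is_known, {"annual_spend": 250_000})
print(result.status, result.output)   # completed {'tier': 'gold'}
print(run_step("assign_tier", assign_tier, tier_is_known, {}).status)  # escalated
```

The structure of the workflow stays fixed; only the decision inside the step is dynamic, and the guardrail decides whether a human needs to step in.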

Mission-Critical Integrations: Predictable Execution with Governance

- Examples: Financial transactions, purchase orders (POs), regulated processes, customer data pipelines, APIs.

- Characteristics: Predictable, high-stakes, regulated, auditable, and possibly high-volume and low-latency.

- What success looks like: Every execution is expected to succeed. Logic is known and stable. Change is managed through trusted DevOps pipelines, not runtime improvisation. Everything is versioned, tested, monitored, and auditable.

- Risk tolerance: Low. Mistakes result in financial loss, compliance violations, or systemic failure.

The Future of Integration in the Era of Autonomous Agents

Imagine a world where agentic AI doesn’t just assist in complex workflows but manages the entire lifecycle with minimal human intervention: translating human intent into executable logic, orchestrating API calls, applying predefined rules, triggering workflows, and maintaining integrations over time.

In this vision, intelligent agents proactively optimize performance, identify and remove bottlenecks, update data sources and existing enterprise systems in real time, and collaborate with other agents across a unified digital ecosystem. This is far beyond traditional automation or robotic process automation. It’s the next wave of intelligent, adaptive, and autonomous integration.

These autonomous agents would continuously monitor data flows, resolve issues, and coordinate tasks to automate complex business processes across multiple systems, keeping integrations reliable even as existing systems and data evolve. They would use human-in-the-loop (HITL) validation to balance autonomy with control, using tools responsibly while maintaining human oversight and auditability.

Because these AI agents understand natural language and business context, they can dynamically reconfigure workflows, optimize processes, update logic, and maintain integrations without starting from scratch. They can trigger workflows, adjust settings, and synchronize data instantly, delivering operational efficiency at scale.

And while these capabilities could replace large swaths of manual integration effort, the real advantage is augmenting human capability: teams gain more time to focus on strategy and innovation while agents handle the operational heavy lifting.

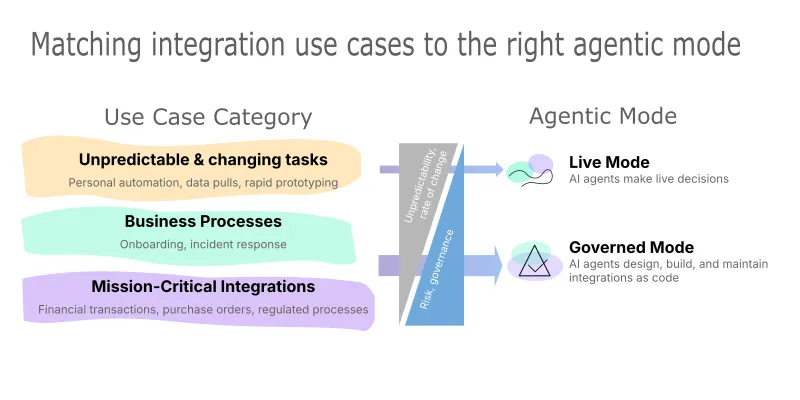

The Agentic Integration Framework: Trusted Autonomy for AI Agents Across Every Use Case

To achieve that future, AI agents must operate differently depending on the scenario. The Agentic Integration Framework defines two distinct agentic modes that cover all three use case categories, each aligning agent behavior with business context, risk tolerance, and integration needs.

Live Mode: Autonomous Decisions On the Fly

- How it works: Agents make autonomous decisions at runtime, calling tools, executing steps independently, and adapting live to user input or system behavior.

- Autonomy profile: Creative, responsive, runtime improvisation, iterative.

- Human role: The “Gatekeeper”. Reviews the agent’s actions after the fact and approves any changes to data or systems before they take effect.

- Use case fit: Personal automations, virtual assistants, low-risk interactive tasks, rapid prototyping.

- Strategic value: Boosts productivity in low-risk, fast-changing environments where speed matters more than predictability.

Live Mode unleashes creative autonomy where runtime adaptability is more important than predictability.

Governed Mode: Agentic Integration “As Code”

- How it works: AI agents autonomously design, write, test, and maintain integrations “as code”, following best practices, respecting architecture guidelines, enabling auditability, and ensuring transaction integrity. The generated workflows can include steps that make intelligent decisions at runtime when needed.

- Autonomy profile: Predictable execution, auditable decisions, reversible changes, continuously improving.

- Human role: The “Conductor”. Sets strategic direction and defines governance frameworks; reviews and approves changes; ensures compliance, architecture alignment, and reuse; approves deployments.

- Use case fit: Processes with known playbooks that include intelligent steps, and mission-critical integrations where runtime risk must be eliminated.

- Strategic value: Delivers full automation for high-stakes, high-volume, low-latency, known or regulated processes while maintaining governance and control.

Governed Mode provides full automation that you can control and trust at runtime.
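For a sense of what “as code” means in practice, here is a minimal Python sketch of the kind of artifact an agent might generate in Governed Mode: a deterministic purchase-order mapping plus the unit test that would gate it in a CI pipeline. The `PurchaseOrder` type, the `to_erp_payload` function, and the payload field names are all hypothetical; a real integration would follow the target system’s own schema. The point is that the logic is fixed before deployment, reviewable in a pull request, and verified by tests rather than improvised at runtime.

```python
import hashlib
import json
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical generated integration: map an internal purchase order
# to an ERP payload. Field names are illustrative, not a real ERP schema.

@dataclass(frozen=True)
class PurchaseOrder:
    po_number: str
    supplier_id: str
    lines: tuple  # ((sku, quantity, unit_price as Decimal), ...)


def to_erp_payload(po: PurchaseOrder) -> dict:
    # Decimal avoids floating-point rounding errors on monetary amounts
    total = sum((qty * price for _, qty, price in po.lines), Decimal("0"))
    body = {
        "orderRef": po.po_number,
        "vendor": po.supplier_id,
        "items": [{"sku": s, "qty": q, "unitPrice": str(p)} for s, q, p in po.lines],
        "total": str(total),
    }
    # Deterministic idempotency key: retrying the same PO yields the same key,
    # so (assuming the receiving system honors such keys) it cannot post twice.
    canonical = json.dumps(body, sort_keys=True).encode()
    body["idempotencyKey"] = hashlib.sha256(canonical).hexdigest()
    return body


# Unit test a CI pipeline would run before a deployment is approved.
def test_to_erp_payload():
    po = PurchaseOrder("PO-1001", "SUP-7",
                       (("A-1", 2, Decimal("9.99")), ("B-2", 1, Decimal("5.00"))))
    payload = to_erp_payload(po)
    assert payload["total"] == "24.98"
    assert payload["items"][0] == {"sku": "A-1", "qty": 2, "unitPrice": "9.99"}
    assert payload == to_erp_payload(po)  # same input, same output, same key


test_to_erp_payload()
```

Because the mapping is plain, versioned code, any change the agent proposes shows up as a diff the Conductor can review, and the test fails the pipeline before a regression reaches production.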

From Hesitation to Strategy: Unlocking the ROI of Agentic Workflows

There’s uncertainty around using AI agents for integration:

- Confusion over what’s possible and what’s safe

- Temptation to automate everything

- Hesitation driven by risk, lack of governance, and failed PoCs

Without a strategy, companies either get stuck in endless experimentation or leap into high-risk solutions with blind trust and no control.

The Agentic Integration Framework offers a repeatable method for deploying AI agents safely and strategically across your organization:

- Make informed decisions about where and how to use autonomy

- Align agentic AI behavior to business goals, risk, compliance, and technical constraints

- Scale intelligent automation without sacrificing control
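One way to make that decision repeatable is to encode it. The Python sketch below is one reading of the framework, not an official implementation: it maps the three use case categories to a mode using the risk tolerance stated for each, and attaches the corresponding human role. The names `UseCase`, `Mode`, `RISK_TOLERANCE`, and `select_mode` are hypothetical, and placing business processes in Governed Mode follows the “known playbooks and intelligent steps” fit described earlier. A real policy would also weigh compliance and technical constraints.

```python
from enum import Enum

# Hypothetical encoding of the framework's mode selection.

class UseCase(Enum):
    UNPLANNED_TASK = "unplanned task"
    BUSINESS_PROCESS = "business process"
    MISSION_CRITICAL = "mission-critical integration"


class Mode(Enum):
    LIVE = "live"
    GOVERNED = "governed"


# Risk tolerance per category, as stated in the use case descriptions.
RISK_TOLERANCE = {
    UseCase.UNPLANNED_TASK: "high",
    UseCase.BUSINESS_PROCESS: "moderate",
    UseCase.MISSION_CRITICAL: "low",
}

HUMAN_ROLE = {Mode.LIVE: "Gatekeeper", Mode.GOVERNED: "Conductor"}


def select_mode(use_case: UseCase) -> Mode:
    # Only high-risk-tolerance work is improvised at runtime; everything else
    # is generated as code and shipped through the existing DevOps pipeline.
    return Mode.LIVE if RISK_TOLERANCE[use_case] == "high" else Mode.GOVERNED


for uc in UseCase:
    mode = select_mode(uc)
    print(f"{uc.value}: {mode.value} mode, human as {HUMAN_ROLE[mode]}")
```

Running it prints one line per category, pairing unplanned tasks with Live Mode and the Gatekeeper, and the other two categories with Governed Mode and the Conductor.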

The next phase of agentic AI integration requires:

- Application with intent: Each mode is mapped to the proper use case

- Democratized access: Anyone, regardless of technical background, can build and use integrations

- Right-time-and-depth collaboration: Humans and AI agents working together at the right moments, with context and escalation handling

- Controlled visibility: Unified, context-aware control planes personalized by use case, user, and autonomy mode

The Agentic Integration Framework is the first step toward realizing that vision, helping enterprises unlock the benefits of autonomy without losing control.

Conclusion

The AI automation landscape has been plagued by confusion, driven by mixed success stories, overhyped results, massive potential impact, significant risks when AI goes wrong, and failed proofs of concept.

Until now, the conversation has been one-sided, focusing solely on AI agents that automate tasks live, without addressing how to manage their accuracy issues and inherent risks.

The Agentic Integration Framework finally provides a trusted path forward, enabling organizations to use AI for automating integrations across business use cases with the control, governance, and reliability that enterprise success demands.

Ready to shape how agentic AI delivers ROI in real-world integrations?

Subscribe to get notifications of new posts and get early access to upcoming examples, guides, and implementation tools.